- by SEO

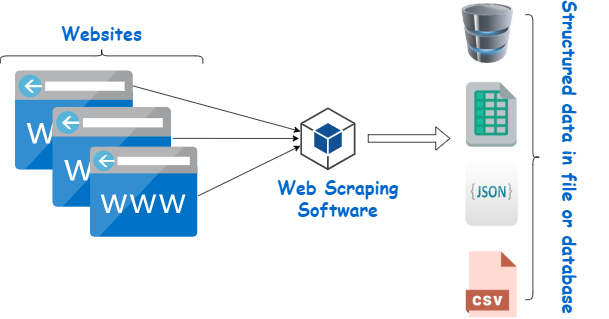

Web scraping is a vital skill for data scientists, offering a way to collect real-time data for analysis, building datasets, and enriching machine learning models. Whether you are collecting product data, analyzing customer reviews, or tracking financial data, the ability to scrape web data efficiently is an essential tool in a data scientist's toolkit. However, effective web scraping goes beyond simple extraction; it requires deliberate techniques to ensure the data is clean, structured, and ready for analysis. In this article, we will explore some of the most reliable web scraping techniques that can improve your data collection efforts and strengthen your analytical capabilities.

- Mastering CSS Selectors for Precise Data Extraction

One of the most powerful techniques in web scraping is using CSS selectors to target specific HTML elements. A CSS selector is a string of characters used to identify elements on a web page based on attributes such as class, ID, or tag name. This allows data scientists to extract exactly the information they need without having to manually sift through messy HTML.

For example, if you are scraping product listings from an e-commerce site, you can use CSS selectors to target the product name, price, and description without including irrelevant data such as navigation menus or footers. Libraries such as BeautifulSoup in Python make it easy to use CSS selectors to parse the HTML and extract only the required data, which helps streamline the scraping process and ensures you avoid unnecessary elements.

Example:

    from bs4 import BeautifulSoup
    import requests

    # Send an HTTP request to get the page content
    url = "https://example.com/products"
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")

    # Use CSS selectors to extract product names and prices
    products = soup.select(".product-name")
    prices = soup.select(".product-price")

    for product, price in zip(products, prices):
        print(f"Product: {product.text}, Price: {price.text}")
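Pairing two independent lists with `zip` can misalign names and prices when one product has no price element. A minimal sketch of a more robust variant selects each product container first and then queries inside it. The `.product-card` class, the inline sample HTML, and the function name `extract_products` are assumptions made for illustration, not markup or code from any real site.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded product page.
SAMPLE_HTML = """
<div class="product-card">
  <span class="product-name">Widget</span>
  <span class="product-price">$19.99</span>
</div>
<div class="product-card">
  <span class="product-name">Gadget</span>
</div>
"""


def extract_products(html):
    """Return one dict per product card; missing fields become None."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.select(".product-card"):
        # select_one returns the first match inside this card, or None
        name = card.select_one(".product-name")
        price = card.select_one(".product-price")
        rows.append({
            "name": name.get_text(strip=True) if name else None,
            "price": price.get_text(strip=True) if price else None,
        })
    return rows


if __name__ == "__main__":
    for row in extract_products(SAMPLE_HTML):
        print(row)
```

Scoping the selectors to each card keeps every name attached to its own price, so a missing field shows up as `None` instead of shifting later rows.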

- Handling Dynamic Content with Selenium

Modern websites often use JavaScript to load content dynamically after the initial page load, which can make traditional scraping tools such as BeautifulSoup or Requests ineffective. This is especially common on websites that implement infinite scrolling, AJAX requests, or interactive elements. To handle such dynamic content, Selenium is an essential tool.

Selenium is a browser automation tool that lets you simulate real user behavior, such as clicking buttons, scrolling through pages, or waiting for elements to load. By controlling a real web browser (such as Chrome or Firefox), Selenium lets you scrape content that is rendered dynamically, making it ideal for websites that rely heavily on JavaScript.

Example:

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    import time

    # Initialize the WebDriver
    driver = webdriver.Chrome()

    # Open the website
    driver.get("https://example.com/dynamic-content")

    # Wait for elements to load
    time.sleep(5)

    # Extract dynamic content after it is rendered
    dynamic_content = driver.find_elements(By.CLASS_NAME, "dynamic-class")
    for content in dynamic_content:
        print(content.text)

    # Close the browser
    driver.quit()

- Efficiently Navigating Through Pagination

Many websites present data across multiple pages, such as listings or product catalogs. To collect all the data, you need to handle pagination efficiently, that is, to navigate through every page of content. If it is not handled properly, scraping paginated content can result in incomplete datasets or inefficient scraping.

One effective approach is to identify the URL pattern used by the website's pagination system. For instance, websites often change the page number in the URL (e.g., page=1, page=2, etc.). You can then programmatically navigate through these pages, extracting data from each one in a loop.

Example:

    import requests
    from bs4 import BeautifulSoup

    base_url = "https://example.com/products?page="

    for page in range(1, 6):  # Scrape the first 5 pages
        url = f"{base_url}{page}"
        response = requests.get(url)
        soup = BeautifulSoup(response.text, "html.parser")

        # Extract data from each page
        products = soup.select(".product-name")
        for product in products:
            print(product.text)
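When the number of pages is not known in advance, a hard-coded page range either misses data or requests pages that do not exist. One option, sketched below, is to keep requesting pages until one comes back empty. The helper names `build_page_url` and `scrape_all_pages`, the `page` query parameter, and the `max_pages` safety cap are assumptions for illustration; `fetch_items` stands for whatever function downloads and parses a single page.

```python
from urllib.parse import urlencode


def build_page_url(base_url, page):
    """Append the page number as a query parameter (assumes ?page=N pagination)."""
    return f"{base_url}?{urlencode({'page': page})}"


def scrape_all_pages(base_url, fetch_items, max_pages=100):
    """Collect items page by page until a page is empty or max_pages is reached."""
    results = []
    for page in range(1, max_pages + 1):
        items = fetch_items(build_page_url(base_url, page))
        if not items:  # An empty page is treated as the end of the listing
            break
        results.extend(items)
    return results


if __name__ == "__main__":
    # Stand-in for a real download-and-parse function: three pages, then nothing.
    fake_site = {
        "https://example.com/products?page=1": ["A", "B"],
        "https://example.com/products?page=2": ["C"],
        "https://example.com/products?page=3": ["D", "E"],
    }
    items = scrape_all_pages(
        "https://example.com/products", lambda url: fake_site.get(url, [])
    )
    print(items)
```

Some sites signal the last page differently, for example with a 404 response or by repeating the final page, so the stop condition may need adjusting per site.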

- Handling AJAX and API Requests

Web scraping is not limited to extracting data from HTML alone. Many modern websites load data asynchronously using AJAX (Asynchronous JavaScript and XML) calls or backend APIs. These requests are often used to load data dynamically without refreshing the entire page, making them a valuable source of structured data.

As a data scientist, you can identify these AJAX requests by inspecting the network activity in your browser's developer tools. Once identified, you can bypass the need to scrape the HTML and directly access the underlying API endpoints. This often provides cleaner, more structured data, making it easier to process and analyze.

Example:

    import requests

    # URL of the API endpoint
    api_url = "https://example.com/api/products"

    # Send a GET request to the API
    response = requests.get(api_url)

    # Parse the returned JSON data
    data = response.json()

    # Extract and display product details
    for product in data["products"]:
        print(f"Product: {product['name']}, Price: {product['price']}")
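Undocumented endpoints can change shape without notice, so indexing straight into the payload may raise `KeyError` partway through a run. The sketch below shows one defensive way to read such a payload. The `products`, `name`, and `price` keys mirror the example above and are assumptions about the response; the function name `parse_products` and the sample JSON are hypothetical.

```python
import json

# Hypothetical payload; a real endpoint may use different keys.
SAMPLE_RESPONSE = '{"products": [{"name": "Widget", "price": 19.99}, {"name": "Gadget"}]}'


def parse_products(payload):
    """Turn a decoded JSON payload into (name, price) tuples, tolerating missing keys."""
    rows = []
    for product in payload.get("products", []):
        # dict.get returns None instead of raising when a key is absent
        rows.append((product.get("name"), product.get("price")))
    return rows


if __name__ == "__main__":
    payload = json.loads(SAMPLE_RESPONSE)
    for name, price in parse_products(payload):
        print(f"Product: {name}, Price: {price}")
```

When fetching with Requests, calling `response.raise_for_status()` before `response.json()` surfaces HTTP errors early instead of attempting to parse an error page.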

- Implementing Rate Limiting and Respectful Scraping

When scraping large volumes of data, it's essential to implement rate limiting to avoid overloading a website's server and getting blocked. Web scraping can put a significant load on a website, especially when many requests are made in a short period of time. Therefore, adding delays between requests and respecting the website's robots.txt file is not only an ethical practice but also a way to ensure your IP doesn't get banned.

In addition to respecting robots.txt, data scientists should consider using tools such as Scrapy that provide built-in rate limiting features. By setting appropriate delay times between requests, you ensure that your scraper behaves like a normal user and doesn't disrupt the website's operations.

Example:

    import time
    import requests

    url = "https://example.com/products"

    for page in range(1, 6):
        response = requests.get(f"{url}?page={page}")

        # Simulate a human-like delay
        time.sleep(2)  # 2-second delay between requests

        print(response.text)
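Respecting robots.txt can be automated with Python's standard-library `urllib.robotparser`. The sketch below parses a robots.txt body, checks whether a URL may be fetched, and uses the site's `Crawl-delay` when one is declared. The sample rules, the helper names `load_rules` and `polite_delay`, and the 1-second fallback are assumptions for illustration; in practice the rules would be downloaded from the target site.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the site's /robots.txt
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_delay(parser, user_agent="*", default=1.0):
    """Use the site's Crawl-delay if it declares one, else a default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


if __name__ == "__main__":
    rules = load_rules(SAMPLE_ROBOTS)
    for url in ["https://example.com/products", "https://example.com/private/data"]:
        if rules.can_fetch("*", url):
            print("allowed:", url)
            time.sleep(polite_delay(rules))  # Pause before the next request
        else:
            print("skipped:", url)
```

Checking `can_fetch` before each request keeps disallowed paths out of the crawl, and deriving the pause from the site's own rules avoids guessing at a suitable delay.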

- Data Cleaning and Structuring for Analysis

Once the data has been scraped, the next critical step is data cleaning and structuring. Raw data pulled from the web is often messy, unstructured, or incomplete, making it difficult to work with. Data scientists need to convert this unstructured data into a clean, structured format, such as a Pandas DataFrame, for analysis.

During the data cleaning process, tasks such as handling missing values, normalizing text, and converting date formats are essential. Additionally, once the data is cleaned, data scientists can store the results in structured formats such as CSV, JSON, or SQL databases for easier access and further analysis.

Example:

    import pandas as pd

    # Create a DataFrame from the scraped data
    data = {
        "Product": ["Product A", "Product B", "Product C"],
        "Price": [19.99, 29.99, 39.99],
    }
    df = pd.DataFrame(data)

    # Clean data (e.g., remove unwanted characters, convert types)
    df["Price"] = df["Price"].astype(float)

    # Save cleaned data to CSV
    df.to_csv("products.csv", index=False)
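Scraped values rarely arrive as tidy floats, so the cleaning tasks named above (missing values, text normalization, date conversion) usually need explicit handling. The sketch below shows one way to do this with pandas. The sample rows, the column names, and the function name `clean` are hypothetical, and dropping incomplete rows is only one possible policy.

```python
import pandas as pd

# Hypothetical raw rows as they might come out of a scraper.
raw = pd.DataFrame({
    "Product": ["  Widget ", "GADGET", "gizmo", None],
    "Price": ["$19.99", "29.99 USD", "N/A", "$5"],
    "Scraped": ["2024-01-05", "2024-01-06", "not a date", "2024-01-08"],
})


def clean(df):
    """Normalize text, parse prices and dates, and drop rows missing key fields."""
    out = df.copy()
    # Trim whitespace and use consistent capitalization
    out["Product"] = out["Product"].str.strip().str.title()
    # Keep only the numeric part of the price; unparseable values become NaN
    out["Price"] = pd.to_numeric(
        out["Price"].str.extract(r"(\d+(?:\.\d+)?)", expand=False), errors="coerce"
    )
    # Invalid dates become NaT instead of raising
    out["Scraped"] = pd.to_datetime(out["Scraped"], errors="coerce")
    return out.dropna(subset=["Product", "Price"]).reset_index(drop=True)


if __name__ == "__main__":
    print(clean(raw))
```

Coercing bad values to `NaN` or `NaT` keeps the pipeline running and makes the gaps visible, so the decision to drop, fill, or re-scrape them can be made in one place.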

Conclusion

Web scraping is a powerful skill that lets data scientists collect and make use of data from the web. By mastering techniques such as CSS selectors, handling dynamic content with Selenium, managing pagination, and working with APIs, data scientists can access high-quality data for analysis. Additionally, respecting rate limits and cleaning and structuring the scraped data ensure that the results are ready for further processing and modeling. By applying these techniques, you'll be able to streamline your web scraping process and enhance your data analysis skills, making you more efficient and effective in your data-driven projects.